Publications

Published Papers

♫: Equal contribution.

☨: Corresponding author.


Rethinking Multimodal Learning from the Perspective of Mitigating Classification Ability Disproportion. [pdf][github]

Qing-Yuan Jiang, Longfei Huang, and Yang Yang.

Proceedings of the 39th Conference on Neural Information Processing Systems (NeurIPS). Oral (1.46%). 2025

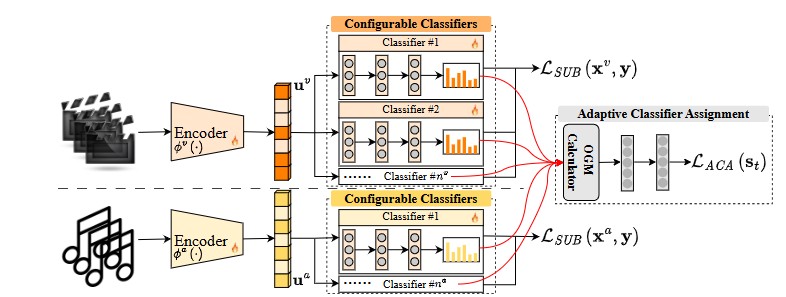

Topic: Multimodal Learning

Abstract: Multimodal learning (MML) is significantly constrained by modality imbalance, leading to suboptimal performance in practice. While existing approaches primarily focus on balancing the learning of different modalities to address this issue, they fundamentally overlook the inherent disproportion in model classification ability, which is the primary cause of this phenomenon. In this paper, we propose a novel multimodal learning approach that dynamically balances the classification ability of weak and strong modalities by incorporating the principle of boosting. Concretely, we first propose a sustained boosting algorithm for multimodal learning that simultaneously optimizes the classification and residual errors. Subsequently, we introduce an adaptive classifier assignment strategy to dynamically improve the classification performance of the weak modality. Furthermore, we theoretically analyze the convergence property of the cross-modal gap function, ensuring the effectiveness of the proposed boosting scheme. As a result, the classification ability of strong and weak modalities is expected to be balanced, thereby mitigating the imbalance issue. Experiments on widely used datasets demonstrate the superiority of our method over various state-of-the-art (SOTA) multimodal learning baselines. The source code is available at https://github.com/njustkmg/NeurIPS25-AUG.
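As background on the boosting principle the abstract builds on (not the paper's sustained boosting algorithm), classic squared-loss boosting repeatedly fits a weak learner to the current residuals and adds a damped copy of it to the ensemble. The minimal Python sketch below shows only that generic idea with one-dimensional decision stumps; `fit_stump`, `boost`, `rounds`, and `lr` are hypothetical names, and the adaptive classifier assignment strategy is not modeled.

```python
from typing import List, Sequence, Tuple

# A decision stump on one feature: (threshold, value_if_x<=threshold, value_otherwise)
Stump = Tuple[float, float, float]


def fit_stump(x: Sequence[float], residual: Sequence[float]) -> Stump:
    """Pick the threshold whose two leaf means best fit the residual (least squares)."""
    best_err, best = float("inf"), (0.0, 0.0, 0.0)
    for t in sorted(set(x)):
        left = [r for xi, r in zip(x, residual) if xi <= t]
        right = [r for xi, r in zip(x, residual) if xi > t]
        lv = sum(left) / len(left)  # never empty: t is one of the x values
        rv = sum(right) / len(right) if right else lv
        err = sum((r - (lv if xi <= t else rv)) ** 2 for xi, r in zip(x, residual))
        if err < best_err:
            best_err, best = err, (t, lv, rv)
    return best


def predict_stump(stump: Stump, xi: float) -> float:
    t, lv, rv = stump
    return lv if xi <= t else rv


def boost(x: Sequence[float], y: Sequence[float],
          rounds: int = 20, lr: float = 0.5) -> Tuple[List[Stump], List[float]]:
    """Squared-loss boosting: every round fits a new stump to the current residuals."""
    stumps: List[Stump] = []
    pred = [0.0] * len(x)
    for _ in range(rounds):
        residual = [yi - pi for yi, pi in zip(y, pred)]
        stump = fit_stump(x, residual)
        stumps.append(stump)
        # Add a damped (learning-rate-scaled) correction to the ensemble prediction.
        pred = [pi + lr * predict_stump(stump, xi) for pi, xi in zip(pred, x)]
    return stumps, pred
```

For example, `boost([1.0, 2.0, 3.0, 4.0], [0.0, 0.0, 1.0, 1.0])` drives the residual on the last two points down by half each round.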


Balance-aware Sequence Sampling Makes Multimodal Learning Better. [pdf][github][poster][slide]

Zhihao Guan, Qing-Yuan Jiang☨, and Yang Yang.

Proceedings of the 34th International Joint Conference on Artificial Intelligence (IJCAI). 2025

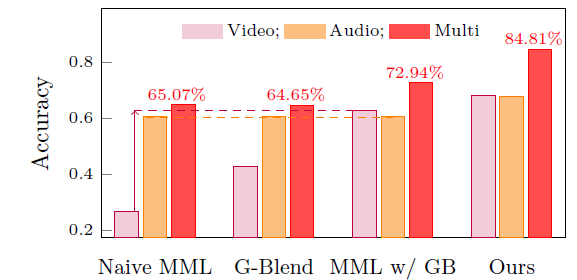

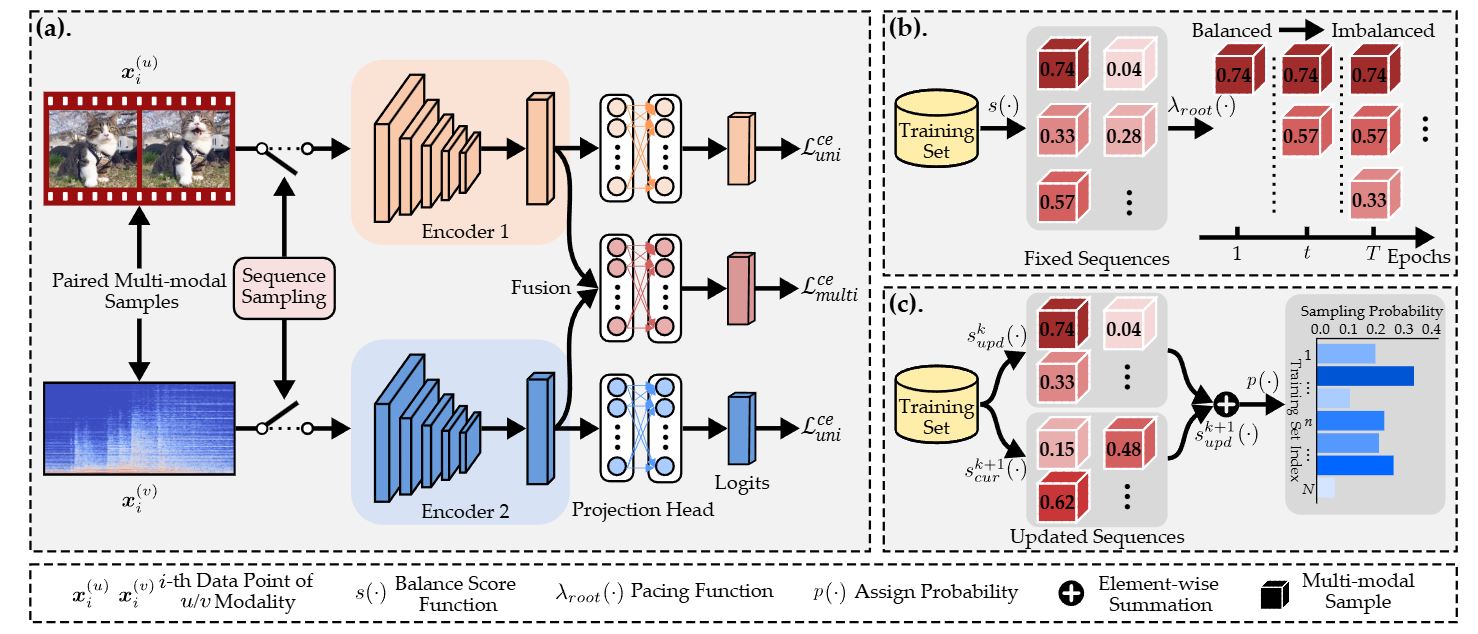

Topic: Multimodal Learning

Abstract: Multimodal learning (MML) is frequently hindered by modality imbalance, leading to suboptimal performance in real-world applications. To address this issue, existing approaches primarily focus on rebalancing MML from the perspective of optimization or architecture design. However, almost all existing methods ignore the impact of the sample sequence: an inappropriate training order tends to trigger learning bias in the model, further exacerbating modality imbalance. In this paper, we propose Balance-aware Sequence Sampling (BSS) to enhance the robustness of MML. Specifically, we first define a multi-perspective measurer to evaluate the balance degree of each sample in terms of correlation and information criteria. Based on this evaluation, we employ a heuristic scheduler based on curriculum learning (CL) that incrementally provides training subsets, progressing from balanced to imbalanced samples to alleviate the imbalance. Moreover, we propose a learning-based probabilistic sampling method that dynamically updates the training sequence in a more fine-grained manner, further improving MML performance. Extensive experiments on widely used datasets demonstrate the superiority of our method over state-of-the-art (SOTA) baselines. The code is available at https://github.com/njustkmg/IJCAI25-BSS.
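To make the curriculum scheduling idea concrete, here is a minimal Python sketch. It assumes each sample already has a scalar balance score (higher meaning more balanced) and exposes a growing prefix of the samples ordered from balanced to imbalanced. The linear pacing function, `start_frac`, and `curriculum_subset` are illustrative assumptions; the paper's multi-perspective measurer and learning-based probabilistic sampler are not shown.

```python
import math
from typing import List, Sequence


def curriculum_subset(balance_scores: Sequence[float], epoch: int,
                      total_epochs: int, start_frac: float = 0.3) -> List[int]:
    """Return the indices of the training subset for a given epoch.

    Samples are ordered from most balanced (highest score) to least balanced,
    and the visible fraction grows linearly from start_frac to 1.0.
    """
    if total_epochs < 1:
        raise ValueError("total_epochs must be >= 1")
    order = sorted(range(len(balance_scores)),
                   key=lambda i: balance_scores[i], reverse=True)
    if total_epochs == 1:
        progress = 1.0
    else:
        progress = min(max(epoch, 0), total_epochs - 1) / (total_epochs - 1)
    frac = start_frac + (1.0 - start_frac) * progress  # linear pacing (assumed)
    k = max(1, math.ceil(frac * len(order)))
    return order[:k]
```

A linear pacing function is only the simplest choice; step-wise or exponential schedules are common alternatives in curriculum learning.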


Towards Equilibrium: An Instantaneous Probe-and-Rebalance Multimodal Learning Approach. [pdf][github][poster][slide]

Yang Yang, Xixian Wu, and Qing-Yuan Jiang☨.

Proceedings of the 34th International Joint Conference on Artificial Intelligence (IJCAI). 2025

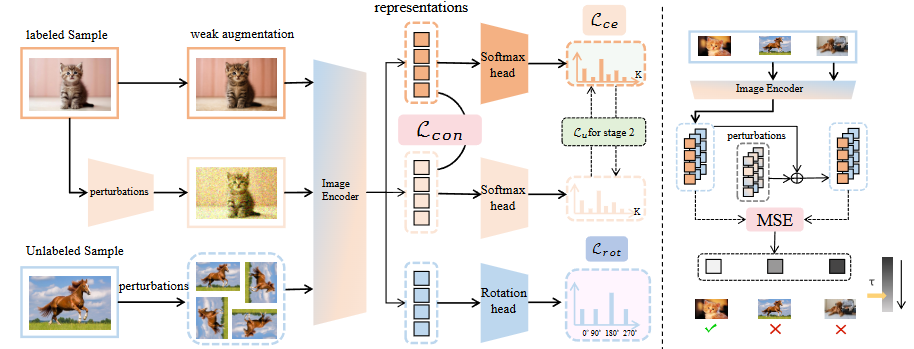

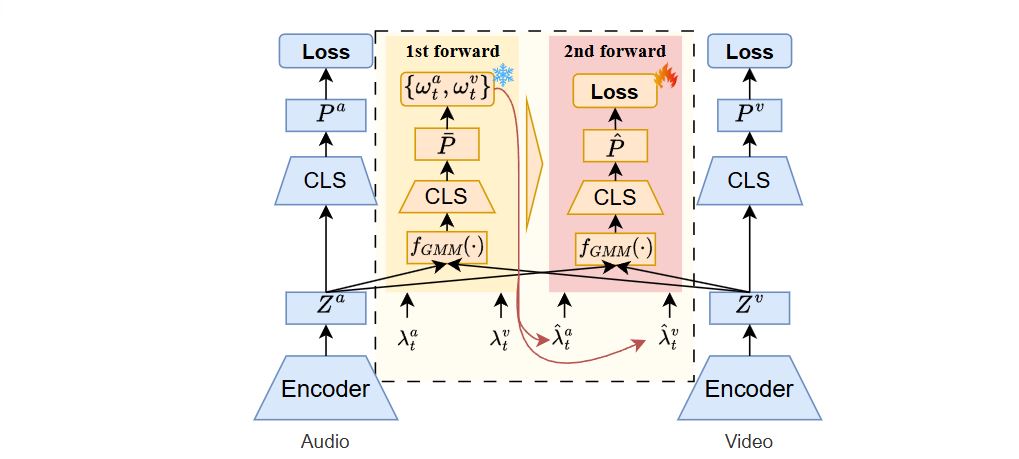

Topic: Multimodal Learning

Abstract: The multimodal imbalance problem has been extensively studied to prevent the undesirable scenario in which multimodal performance falls below that of unimodal models. However, existing methods typically assess the strength of modalities and perform learning simultaneously under the imbalanced state. This deferred strategy fails to rebalance multimodal learning instantaneously, leading to performance degradation. To address this, we propose a novel multimodal learning approach, termed instantaneous probe-and-rebalance multimodal learning (IPRM), which employs a two-pass forward method to first probe (but not learn) and then perform rebalanced learning under the balanced state. Concretely, we first employ geodesic multimodal mixup (GMM) to incorporate the fusion representation and probe modality strength in the first forward pass. The weights are then instantaneously recalibrated based on the probed strength, facilitating balanced training in the second forward pass. This process is applied dynamically throughout training. Extensive experiments show that IPRM outperforms all baselines, achieving state-of-the-art (SOTA) performance on numerous widely used datasets. The code is available at https://github.com/njustkmg/IJCAI25-IPRM.
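The probe-then-rebalance control flow can be illustrated with a small Python sketch. A probe-only pass measures each modality's strength without updating anything, and the resulting weights then scale the per-modality losses in the second, learning pass. Here strength is assumed to be the mean softmax probability of the true label, and weights are taken to be inversely proportional to strength. Both choices and all function names are hypothetical stand-ins, not IPRM's geodesic multimodal mixup probe or its recalibration rule.

```python
import math
from typing import Dict, List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def probe_strength(logits_batch: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """First pass (probe only, no learning): mean probability of the true label."""
    probs = [softmax(logits)[y] for logits, y in zip(logits_batch, labels)]
    return sum(probs) / len(probs)


def rebalance_weights(strengths: Dict[str, float]) -> Dict[str, float]:
    """Weight modalities inversely to probed strength; the weights average to 1."""
    inv = {name: 1.0 / max(s, 1e-8) for name, s in strengths.items()}
    scale = len(inv) / sum(inv.values())
    return {name: v * scale for name, v in inv.items()}


def rebalanced_loss(per_modality_loss: Dict[str, float], weights: Dict[str, float]) -> float:
    """Second pass: combine the unimodal losses with the recalibrated weights."""
    return sum(weights[name] * loss for name, loss in per_modality_loss.items())
```

With probed strengths of 0.8 (audio) and 0.2 (visual), the weaker visual branch receives four times the weight of the audio branch in the second pass.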


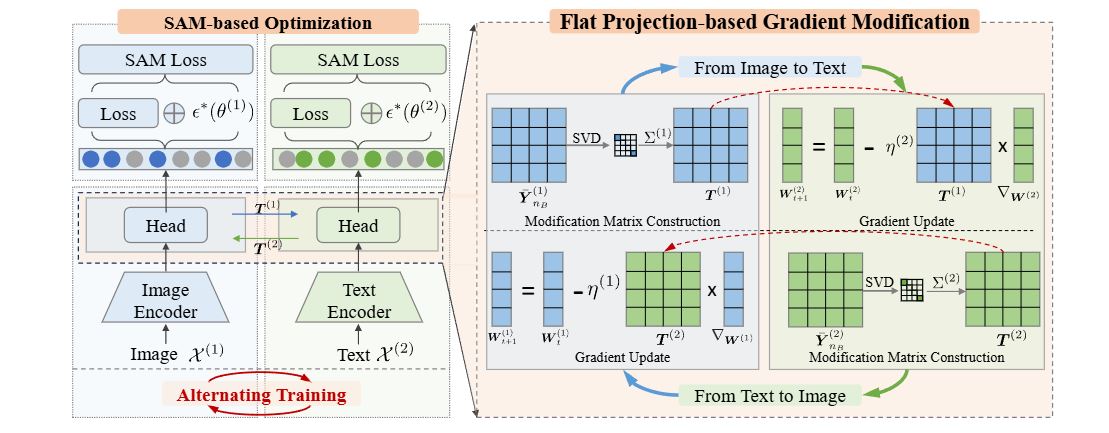

Interactive Multimodal Learning via Flat Gradient Modification. [pdf][github][poster][slide]

Qing-Yuan Jiang, Zhouyang Chi, and Yang Yang.

Proceedings of the 34th International Joint Conference on Artificial Intelligence (IJCAI). 2025

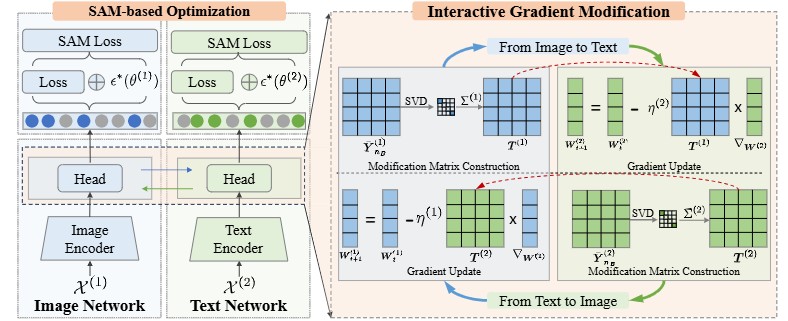

Topic: Multimodal Learning

Abstract: Due to the notorious modality imbalance phenomenon, multimodal learning (MML) struggles to achieve satisfactory performance. Recently, multimodal learning with alternating unimodal adaptation (MLA) has proven effective in mitigating the interference between modalities by capturing interaction through orthogonal projection, thus relieving the modality imbalance phenomenon to some extent. However, projecting orthogonally to the original space can lead to poor plasticity as alternating learning proceeds, thus affecting model performance. To address this issue, we propose a novel multimodal learning method called interactive MML via flat gradient modification (IGM), which employs a flat gradient modification strategy to enhance interactive MML. Specifically, we first employ a flat projection-based gradient modification strategy that is independent of the original space, aiming to avoid the poor plasticity issue. Then we introduce a sharpness-aware minimization (SAM)-based optimization strategy to fully exploit the flatness of the learning objective and further enhance interaction during learning. As a result, the plasticity problem can be avoided and overall performance is improved. Extensive experiments on widely used datasets demonstrate that IGM outperforms various state-of-the-art (SOTA) baselines. The source code is available at https://github.com/njustkmg/IJCAI25-IGM.
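Sharpness-aware minimization (SAM), which the abstract builds on, first perturbs the weights toward the locally worst-case direction, w + ε with ε = ρ g / ‖g‖. It then descends from the original w using the gradient evaluated at that perturbed point. The Python sketch below implements one standard SAM step on plain lists. It does not implement IGM's flat projection-based gradient modification, and `lr`, `rho`, and the toy quadratic objective are illustrative choices.

```python
import math
from typing import Callable, List

Vector = List[float]


def sam_step(w: Vector, grad_fn: Callable[[Vector], Vector],
             lr: float = 0.1, rho: float = 0.05) -> Vector:
    """One sharpness-aware minimization (SAM) update.

    1. Move to the first-order worst-case neighbour w + eps, eps = rho * g / ||g||.
    2. Descend from the original w with the gradient evaluated at w + eps.
    """
    g = grad_fn(w)
    norm = math.sqrt(sum(gi * gi for gi in g)) + 1e-12  # avoid division by zero
    eps = [rho * gi / norm for gi in g]
    g_adv = grad_fn([wi + ei for wi, ei in zip(w, eps)])
    return [wi - lr * gi for wi, gi in zip(w, g_adv)]


# Toy objective f(w) = sum(w_i^2), whose gradient is 2w.
def quadratic_grad(w: Vector) -> Vector:
    return [2.0 * wi for wi in w]
```

Each step costs two gradient evaluations, which is the usual price of SAM compared with plain gradient descent.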


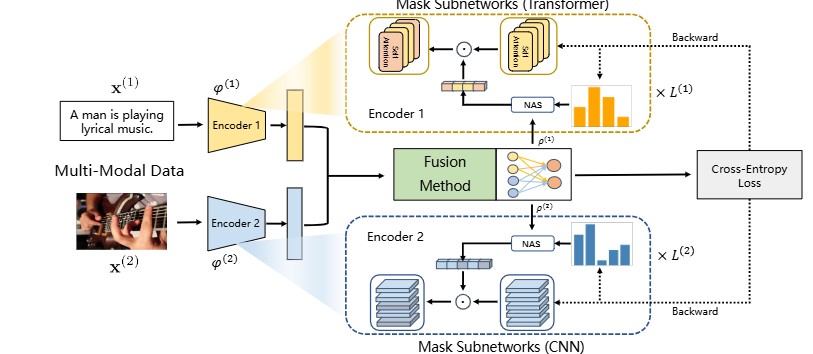

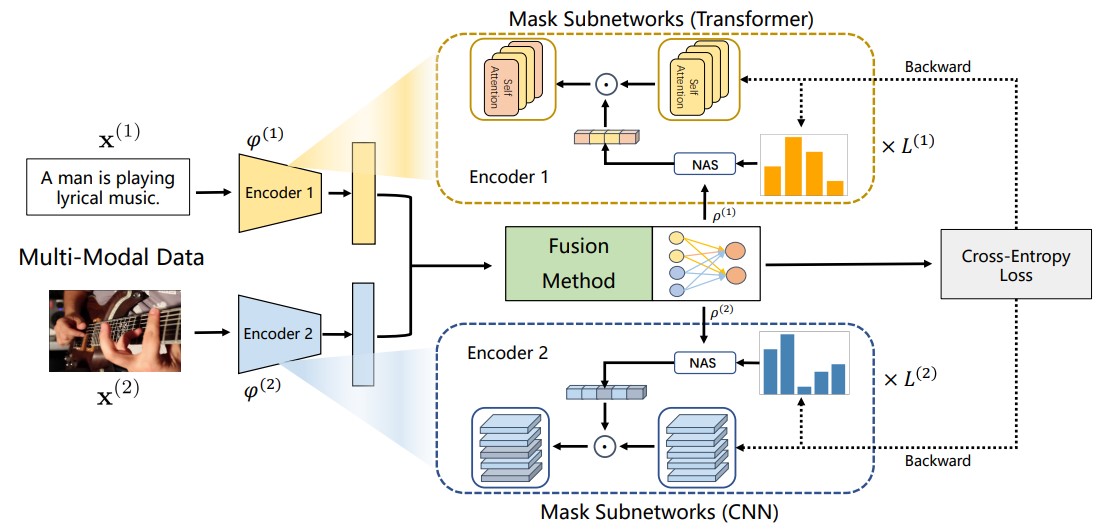

Learning to Rebalance Multi-Modal Optimization by Adaptively Masking Subnetworks. [pdf][github]

Yang Yang, Hongpeng Pan, Qing-Yuan Jiang, Yi Xu, and Jinhui Tang.

IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI). 2025

Topic: Multimodal Learning

Abstract: Multi-modal learning aims to enhance performance by unifying models from various modalities, but it often faces the “modality imbalance” problem in real data, which leads to a bias towards dominant modalities and neglect of the others, thereby limiting its overall effectiveness. To address this challenge, the core idea is to balance the optimization of each modality to achieve a joint optimum. Existing approaches often employ a modal-level control mechanism to adjust the update of each modality's parameters. However, such a global-wise updating mechanism ignores the different importance of each parameter. Inspired by subnetwork optimization, we explore a uniform sampling-based optimization strategy and find it more effective than global-wise updating. Based on these findings, we further propose a novel importance sampling-based, element-wise joint optimization method, called Adaptively Mask Subnetworks Considering Modal Significance (AMSS). Specifically, we incorporate mutual information rates to determine the modal significance and employ non-uniform adaptive sampling to select foreground subnetworks from each modality for parameter updates, thereby rebalancing multi-modal learning. Additionally, we demonstrate the reliability of the AMSS strategy through convergence analysis. Building upon these theoretical insights, we further enhance the multi-modal mask subnetwork strategy using unbiased estimation, referred to as AMSS+. Extensive experiments reveal the superiority of our approach over comparison methods.
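Masking a random subset of parameter updates biases the step unless the kept elements are reweighted. A standard remedy is inverse-probability scaling: keep element i with probability p_i and divide its gradient by p_i, so that the expected masked gradient equals the full gradient. The Python sketch below illustrates only this generic estimator. In AMSS/AMSS+ the sampling probabilities are derived from modal significance, whereas here `keep_probs` is simply an input, and `masked_unbiased_update` is a hypothetical name.

```python
import random
from typing import List, Sequence


def masked_unbiased_update(grads: Sequence[float], keep_probs: Sequence[float],
                           rng: random.Random) -> List[float]:
    """Keep gradient element i with probability p_i and rescale it by 1 / p_i.

    E[output_i] = p_i * (g_i / p_i) + (1 - p_i) * 0 = g_i, so the masked
    update is an unbiased estimate of the full gradient.
    """
    out = []
    for g, p in zip(grads, keep_probs):
        if not 0.0 < p <= 1.0:
            raise ValueError("keep probabilities must lie in (0, 1]")
        out.append(g / p if rng.random() < p else 0.0)
    return out
```

The estimator is unbiased but noisier when some p_i is small, which is one reason sampling probabilities are usually tied to parameter importance rather than chosen uniformly.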


Facilitating Multimodal Classification via Dynamically Learning Modality Gap. [pdf][github][poster][video][slide]

Yang Yang, Fengqiang Wan, Qing-Yuan Jiang☨, and Yi Xu.

Proceedings of the 38th Conference on Neural Information Processing Systems (NeurIPS). 2024

Topic: Multimodal Learning

Abstract: Multimodal learning falls into the trap of an optimization dilemma due to the modality imbalance phenomenon, leading to unsatisfactory performance in real applications. A core reason for modality imbalance is that the models of different modalities converge at different rates. Many attempts naturally focus on adjusting learning procedures adaptively. Essentially, models converge at different rates because the difficulty of fitting category labels differs across modalities during learning. From the perspective of label fitting, we find that appropriate positive intervention in label fitting can correct this difference in learning ability. By exploiting the ability of contrastive learning to intervene in category label fitting, we propose a novel multimodal learning approach that dynamically integrates unsupervised contrastive learning and supervised multimodal learning to address the modality imbalance problem. We find that a simple heuristic integration strategy can significantly alleviate the modality imbalance phenomenon. Moreover, we design a learning-based integration strategy that integrates the two losses dynamically, further improving performance. Experiments on widely used datasets demonstrate the superiority of our method over state-of-the-art (SOTA) multimodal learning approaches. The code is available at https://github.com/njustkmg/NeurIPS24-LFM.
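Integrating an unsupervised contrastive term with a supervised objective can be sketched as a weighted sum. Below, a one-directional InfoNCE loss treats the i-th pair of embeddings from two modalities as the positive and the other pairs in the batch as negatives. A weight `alpha` then mixes it with a supervised loss. The temperature, the fixed `alpha`, and all names are assumptions for illustration. The paper's heuristic and learning-based integration strategies may set or schedule the weight differently.

```python
import math
from typing import List, Sequence

Matrix = Sequence[Sequence[float]]


def _normalize(v: Sequence[float]) -> List[float]:
    n = math.sqrt(sum(x * x for x in v)) or 1.0  # guard against zero vectors
    return [x / n for x in v]


def info_nce(za: Matrix, zb: Matrix, temperature: float = 0.1) -> float:
    """One-directional InfoNCE: row i of za should match row i of zb;
    every other row of zb in the batch serves as a negative."""
    a = [_normalize(v) for v in za]
    b = [_normalize(v) for v in zb]
    loss = 0.0
    for i, ai in enumerate(a):
        logits = [sum(x * y for x, y in zip(ai, bj)) / temperature for bj in b]
        m = max(logits)
        log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
        loss += log_sum - logits[i]  # cross-entropy with the positive at index i
    return loss / len(a)


def combined_loss(supervised: float, contrastive: float, alpha: float) -> float:
    """Mix the supervised and contrastive terms; a fixed alpha in [0, 1] is assumed here."""
    return (1.0 - alpha) * supervised + alpha * contrastive
```

Matching pairs give a loss near zero, while swapped pairs give a loss of roughly 1 / temperature.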


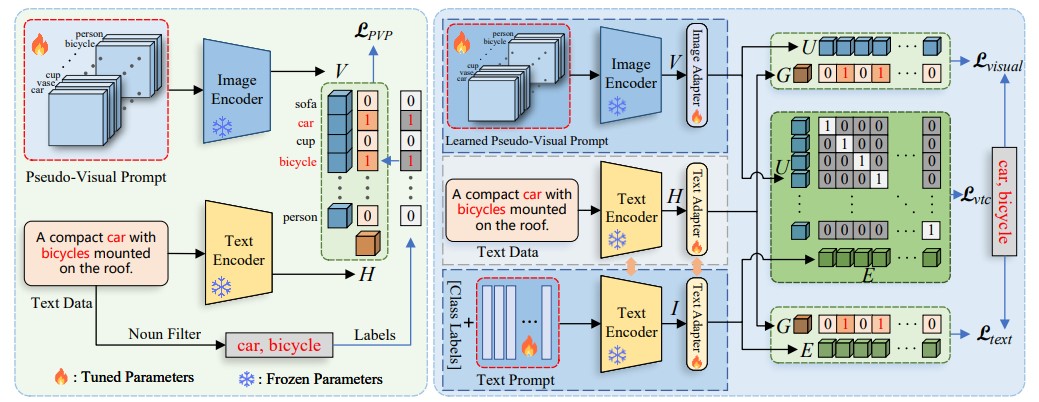

TAI++: Text as Image for Multi-Label Image Classification by Co-Learning Transferable Prompt. [pdf][github]

Xiangyu Wu, Qing-Yuan Jiang, Yifeng Wu, Qingguo Chen, Yang Yang, and Jianfeng Lu.

Proceedings of the 33rd International Joint Conference on Artificial Intelligence (IJCAI). 2024

Topic: Multimodal Learning

Abstract: The recent introduction of prompt tuning based on pre-trained vision-language models has dramatically improved the performance of multi-label image classification. However, some existing strategies still have drawbacks: they either exploit massive labeled visual data at a high cost or use text data only for text prompt tuning and thus fail to learn the diversity of visual knowledge. Hence, the application scenarios of these methods are limited. In this paper, we propose a pseudo-visual prompt (PVP) module for implicit visual prompt tuning to address this problem. Specifically, we first learn a pseudo-visual prompt for each category, mining diverse visual knowledge from the well-aligned space of pre-trained vision-language models. Then, a co-learning strategy with a dual-adapter module is designed to transfer visual knowledge from the pseudo-visual prompt to the text prompt, enhancing their visual representation abilities. Experimental results on the VOC2007, MS-COCO, and NUS-WIDE datasets demonstrate that our method can surpass state-of-the-art (SOTA) methods across various settings for multi-label image classification tasks.


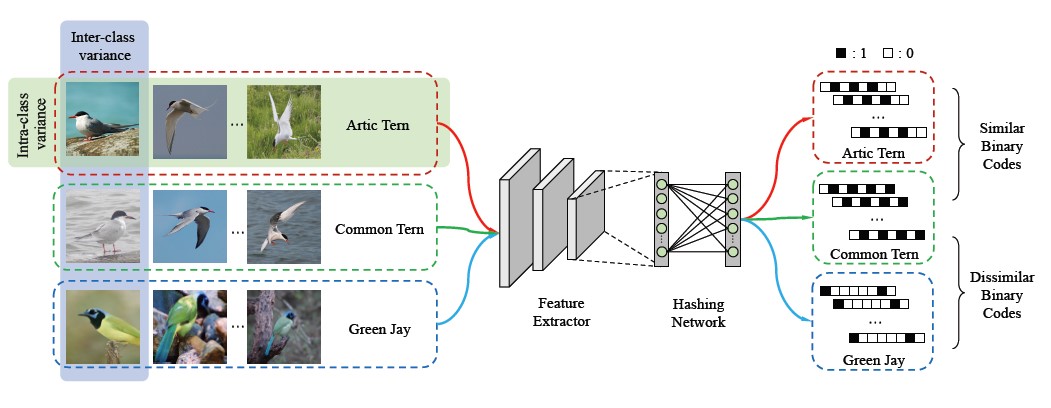

SEMICON: A Learning-to-hash Solution for Large-scale Fine-grained Image Retrieval. [pdf]

Yang Shen, Xuhao Sun, Xiu-Shen Wei, Qing-Yuan Jiang, and Jian Yang.

Proceedings of the European Conference on Computer Vision (ECCV). 2022

Topics: Learning2Hash, Fine-Grained Image Retrieval

Abstract: In this paper, we propose Suppression-Enhancing Mask based attention and Interactive Channel transformatiON (SEMICON) to learn binary hash codes for large-scale fine-grained image retrieval tasks. In SEMICON, we first develop a suppression-enhancing mask (SEM) based attention to dynamically localize discriminative image regions. More importantly, unlike existing attention mechanisms that simply erase previous discriminative regions, our SEM restrains such regions and then discovers other complementary regions by considering the relation between activated regions in a stage-by-stage fashion. In each stage, the interactive channel transformation (ICON) module is then designed to exploit correlations across channels of the attended activation tensors. Since channels generally correspond to the parts of fine-grained objects, part correlation can be modeled accordingly, which further improves fine-grained retrieval accuracy. Moreover, for computational economy, ICON is realized by an efficient two-step process. Finally, the hash learning of SEMICON consists of both global- and local-level branches for better representing fine-grained objects, generating binary hash codes that explicitly correspond to multiple levels. Experiments on five benchmark fine-grained datasets show our superiority over competing methods. Code is available at https://github.com/NJUST-VIPGroup/SEMICON.


ExchNet: A Unified Hashing Network for Large-Scale Fine-Grained Image Retrieval. [pdf]

Quan Cui, Qing-Yuan Jiang♫, Xiu-Shen Wei, Wu-Jun Li, and Osamu Yoshie.

Proceedings of the European Conference on Computer Vision (ECCV). 2020

Topics: Learning2Hash, Fine-Grained Image Retrieval

Abstract: Retrieving content-relevant images from a large-scale fine-grained dataset can suffer from intolerably slow query speed and highly redundant storage cost, due to the high-dimensional real-valued embeddings used to distinguish subtle visual differences among fine-grained objects. In this paper, we study the novel topic of fine-grained hashing, which generates compact binary codes for fine-grained images, leveraging the search and storage efficiency of hash learning to alleviate these problems. Specifically, we propose a unified end-to-end trainable network, termed ExchNet. Based on attention mechanisms and the proposed attention constraints, ExchNet first obtains both local and global features to represent object parts and whole fine-grained objects, respectively. Furthermore, to ensure the discriminative ability and the semantic consistency of these part-level features across images, we design a local feature alignment approach that performs a feature exchanging operation. Then, an alternating learning algorithm is employed to optimize the whole ExchNet and generate the final binary hash codes. Extensive experiments show that ExchNet consistently outperforms state-of-the-art generic hashing methods on five fine-grained datasets. Moreover, compared with other approximate nearest neighbor methods, ExchNet achieves the best speed-up and storage reduction, revealing its efficiency and practicality.
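A few lines of Python can show why compact binary codes speed up retrieval. Once real-valued features are quantized with sign() and packed into integers, comparing a query with a database item costs one XOR plus a population count, and each item occupies one bit per dimension. The sketch below is a generic Hamming-ranking example. It does not implement ExchNet's attention, feature exchanging, or alternating learning, and the function names are illustrative.

```python
from typing import List, Sequence


def to_code(features: Sequence[float]) -> int:
    """Quantize with sign() (x >= 0 -> bit 1) and pack the bits into one integer."""
    code = 0
    for i, x in enumerate(features):
        if x >= 0:
            code |= 1 << i
    return code


def hamming(a: int, b: int) -> int:
    """Number of differing bits: XOR, then count the set bits."""
    return bin(a ^ b).count("1")


def rank_database(query: int, database: Sequence[int]) -> List[int]:
    """Indices of database codes sorted by Hamming distance (ties broken by index)."""
    return sorted(range(len(database)), key=lambda i: (hamming(query, database[i]), i))
```

For instance, `to_code([0.3, -1.2, 0.5, -0.1])` packs the signs into the 4-bit code `0b0101` (5).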


Discrete Latent Factor Model for Cross-Modal Hashing. [pdf][github]

Qing-Yuan Jiang and Wu-Jun Li.

IEEE Transactions on Image Processing (TIP). 2019

Topics: Learning2Hash, Cross-Modal Retrieval

Abstract: Due to its storage and retrieval efficiency, cross-modal hashing (CMH) has been widely used for cross-modal similarity search in many multimedia applications. According to the training strategy, existing CMH methods can be mainly divided into two categories: relaxation-based continuous methods and discrete methods. In general, relaxation-based continuous methods train faster than discrete methods, but their accuracy is not satisfactory. In contrast, discrete methods are typically more accurate than relaxation-based continuous methods, but their training is very time-consuming. In this paper, we propose a novel CMH method, called discrete latent factor model based cross-modal hashing (DLFH), for cross-modal similarity search. DLFH is a discrete method that directly learns the binary hash codes for CMH, and its training is also efficient. Experiments show that DLFH achieves significantly better accuracy than existing methods, and its training time is comparable to that of relaxation-based continuous methods, which are much faster than existing discrete methods.


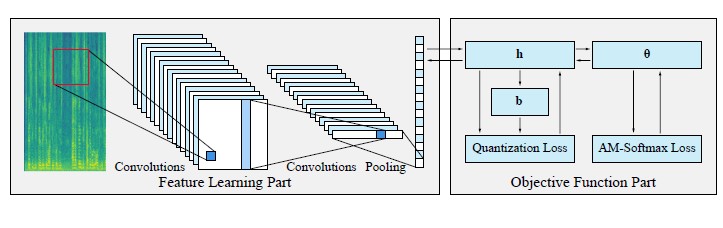

Deep Hashing for Speaker Identification and Retrieval. [pdf]

Lei Fan, Qing-Yuan Jiang, Ya-Qi Yu, and Wu-Jun Li.

Proceedings of the Annual Conference of the International Speech Communication Association (INTERSPEECH). 2019

Topic: Learning2Hash

Abstract: Speaker identification and retrieval have been widely used in real applications. To overcome the inefficiency caused by real-valued representations, several speaker hashing methods have been proposed that learn binary codes as representations for speaker identification and retrieval. However, these hashing methods are based on i-vectors and cannot achieve satisfactory retrieval accuracy because they cannot learn discriminative feature representations. In this paper, we propose a novel deep hashing method, called deep additive margin hashing (DAMH), to improve retrieval performance for speaker identification and retrieval tasks. Compared with existing speaker hashing methods, DAMH performs feature learning and binary code learning seamlessly by incorporating the two procedures into an end-to-end architecture. Experimental results on the large-scale audio dataset VoxCeleb2 show that DAMH outperforms existing speaker hashing methods and achieves state-of-the-art performance.
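The method's name suggests an additive margin softmax loss, which is widely used in speaker recognition. Assuming that reading, the target class's cosine similarity is reduced by a margin m before scaling by s: the target logit is s(cos θ_y - m), while the other classes keep s·cos θ_j. The Python sketch below computes this loss for a single embedding. The values of s and m and the function names are illustrative, and how DAMH couples the loss with binary code learning is not shown.

```python
import math
from typing import List, Sequence


def _unit(v: Sequence[float]) -> List[float]:
    n = math.sqrt(sum(x * x for x in v)) or 1.0  # guard against zero vectors
    return [x / n for x in v]


def am_softmax_loss(embedding: Sequence[float], class_weights: Sequence[Sequence[float]],
                    label: int, s: float = 30.0, m: float = 0.35) -> float:
    """Additive margin softmax: target logit s*(cos - m), other logits s*cos."""
    x = _unit(embedding)
    logits = []
    for j, w in enumerate(class_weights):
        cos = sum(a * b for a, b in zip(x, _unit(w)))
        logits.append(s * (cos - m) if j == label else s * cos)
    mx = max(logits)  # log-sum-exp with max subtraction for stability
    log_sum = mx + math.log(sum(math.exp(z - mx) for z in logits))
    return log_sum - logits[label]
```

Because the margin lowers only the target logit, an embedding must be closer to its class center than plain softmax would require to reach the same loss.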


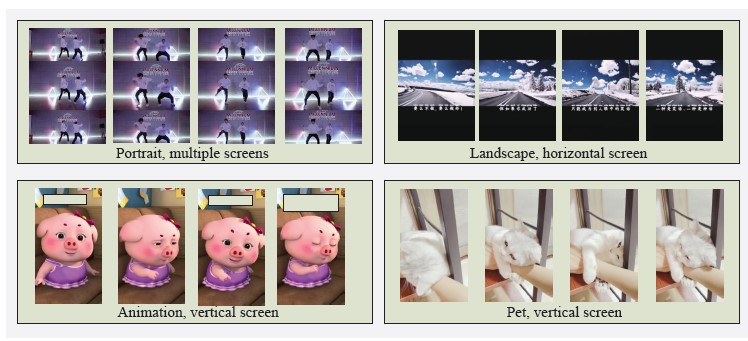

SVD: A Large-Scale Short Video Dataset for Near-Duplicate Video Retrieval. [pdf][github][project][poster]

Qing-Yuan Jiang, Yi He, Gen Li, Jian Lin, Lei Li, and Wu-Jun Li.

Proceedings of the International Conference on Computer Vision (ICCV). 2019

Topic: Near-Duplicate Video Retrieval

Abstract: With the explosive growth of video data in real applications, near-duplicate video retrieval (NDVR) has become indispensable and challenging, especially for short videos. However, all existing NDVR datasets were introduced for long videos. Furthermore, most of them are small-scale and lack diversity due to the high cost of collecting and labeling near-duplicate videos. In this paper, we introduce a large-scale short video dataset, called SVD, for the NDVR task. SVD contains over 500,000 short videos and over 30,000 labeled near-duplicate videos. We use multiple video mining techniques to construct positive/negative pairs. Furthermore, we design temporal and spatial transformations that mimic user-attack behavior in real applications to construct more difficult variants of SVD. Experiments show that existing state-of-the-art NDVR methods, including real-value based and hashing based methods, fail to achieve satisfactory performance on this challenging dataset. The release of the SVD dataset will foster research and system engineering in the NDVR area. The SVD dataset is available at https://svdbase.github.io.


Deep Discrete Supervised Hashing. [pdf][github]

Qing-Yuan Jiang, Xue Cui, and Wu-Jun Li.

IEEE Transactions on Image Processing (TIP). 2018

Topic: Learning2Hash

Abstract: Hashing has been widely used for large-scale search due to its low storage cost and fast query speed. By using supervised information, supervised hashing can significantly outperform unsupervised hashing. Recently, discrete supervised hashing and feature learning based deep hashing have been two representative advances in supervised hashing. On the one hand, hashing is essentially a discrete optimization problem. Hence, utilizing supervised information to directly guide the discrete (binary) coding procedure can avoid sub-optimal solutions and improve accuracy. On the other hand, feature learning based deep hashing, which integrates deep feature learning and hash-code learning into an end-to-end architecture, can enhance the feedback between feature learning and hash-code learning. The key in discrete supervised hashing is to adopt supervised information to directly guide the discrete coding procedure in hashing. The key in deep hashing is to adopt supervised information to directly guide the deep feature learning procedure. However, most deep supervised hashing methods cannot use supervised information to directly guide both the discrete (binary) coding procedure and the deep feature learning procedure in the same framework. In this paper, we propose a novel deep hashing method, called deep discrete supervised hashing (DDSH). DDSH is the first deep hashing method that can utilize pairwise supervised information to directly guide both the discrete coding procedure and the deep feature learning procedure, and thus enhance the feedback between these two important procedures. Experiments on four real datasets show that DDSH can outperform other state-of-the-art baselines, including both discrete hashing and deep hashing baselines, for image retrieval.


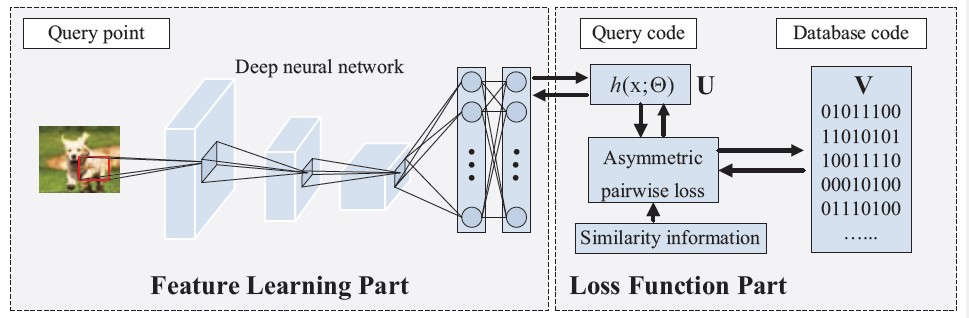

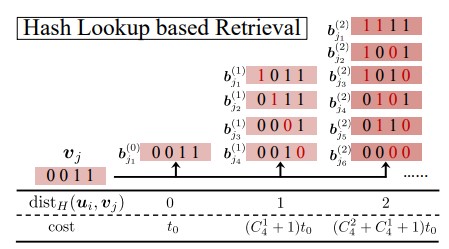

Asymmetric Deep Supervised Hashing. [pdf][github][poster]

Qing-Yuan Jiang and Wu-Jun Li.

Proceedings of the AAAI Conference on Artificial Intelligence (AAAI). 2018

Topic: Learning2Hash

Abstract: Hashing has been widely used for large-scale approximate nearest neighbor search because of its storage and search efficiency. Recent work has found that deep supervised hashing can significantly outperform non-deep supervised hashing in many applications. However, most existing deep supervised hashing methods adopt a symmetric strategy that learns one deep hash function for both query points and database (retrieval) points. Training these symmetric deep supervised hashing methods is typically time-consuming, which makes it hard for them to effectively utilize the supervised information in cases with a large-scale database. In this paper, we propose a novel deep supervised hashing method, called asymmetric deep supervised hashing (ADSH), for large-scale nearest neighbor search. ADSH treats the query points and database points in an asymmetric way. More specifically, ADSH learns a deep hash function only for query points, while the hash codes for database points are learned directly. The training of ADSH is much more efficient than that of traditional symmetric deep supervised hashing methods. Experiments show that ADSH can achieve state-of-the-art performance in real applications.
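The asymmetry can be illustrated with a squared inner-product loss. Only query points pass through a (here hypothetical) network whose outputs are relaxed with tanh, while database codes are stored directly as ±1 vectors. Similarities are assumed to lie in {-1, +1} and are scaled by the code length c, so a perfectly matching pair has inner product c. This is a simplified Python illustration; ADSH's actual objective and its discrete update for the database codes are defined in the paper and are not reproduced here.

```python
import math
from typing import Sequence


def asymmetric_loss(query_outputs: Sequence[Sequence[float]],
                    db_codes: Sequence[Sequence[int]],
                    sim: Sequence[Sequence[int]], code_len: int) -> float:
    """Squared loss between <tanh(query output), database code> and code_len * S_ij.

    query_outputs: raw outputs of a hypothetical query network, one row per query.
    db_codes: database codes stored directly as +1/-1 vectors (no network).
    sim: S_ij in {-1, +1}.
    """
    total = 0.0
    for i, f in enumerate(query_outputs):
        u = [math.tanh(x) for x in f]  # continuous relaxation on the query side only
        for j, v in enumerate(db_codes):
            inner = sum(a * b for a, b in zip(u, v))
            total += (inner - code_len * sim[i][j]) ** 2
    return total
```

Because the database side has no network, its codes can be optimized directly as binary variables, which is where the training-time savings of the asymmetric design come from.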


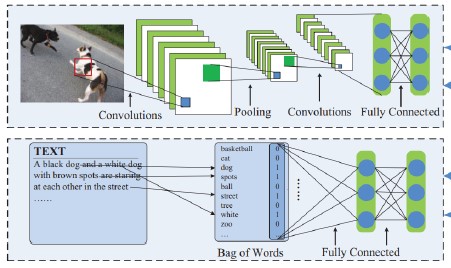

Deep Cross-Modal Hashing. [pdf][github][poster][video][slide]

Qing-Yuan Jiang and Wu-Jun Li.

Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Spotlight. 2017

Topics: Learning2Hash, Cross-Modal Retrieval

Abstract: Due to its low storage cost and fast query speed, cross-modal hashing (CMH) has been widely used for similarity search in multimedia retrieval applications. However, most existing CMH methods are based on hand-crafted features, which might not be optimally compatible with the hash-code learning procedure. As a result, existing CMH methods with hand-crafted features may not achieve satisfactory performance. In this paper, we propose a novel CMH method, called deep cross-modal hashing (DCMH), which integrates feature learning and hash-code learning into the same framework. DCMH is an end-to-end learning framework with deep neural networks, one for each modality, that performs feature learning from scratch. Experiments on three real datasets with image-text modalities show that DCMH can outperform other baselines and achieve state-of-the-art performance in cross-modal retrieval applications.
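Deep cross-modal hashing methods commonly tie the two modalities together with a pairwise likelihood. With θ_ij = ½⟨u_i, v_j⟩ for an image code u_i and a text code v_j, the similarity label S_ij ∈ {0, 1} is modeled as sigmoid(θ_ij). This gives the negative log-likelihood Σ [log(1 + e^θ_ij) - S_ij θ_ij]. The Python sketch below evaluates that loss on given codes. It illustrates this family of objectives and is not DCMH's complete training objective, which also involves the two feature-learning networks and code quantization. The function name is illustrative.

```python
import math
from typing import Sequence


def pairwise_nll(img_codes: Sequence[Sequence[float]],
                 txt_codes: Sequence[Sequence[float]],
                 sim: Sequence[Sequence[int]]) -> float:
    """Negative log-likelihood of cross-modal similarity labels S_ij in {0, 1},
    with P(S_ij = 1) = sigmoid(theta_ij) and theta_ij = 0.5 * <u_i, v_j>."""
    total = 0.0
    for i, u in enumerate(img_codes):
        for j, v in enumerate(txt_codes):
            theta = 0.5 * sum(a * b for a, b in zip(u, v))
            # Numerically stable softplus: log(1 + e^theta)
            softplus = max(theta, 0.0) + math.log1p(math.exp(-abs(theta)))
            total += softplus - sim[i][j] * theta
    return total
```

Minimizing this loss pushes the codes of similar image-text pairs toward large inner products and those of dissimilar pairs toward small ones.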


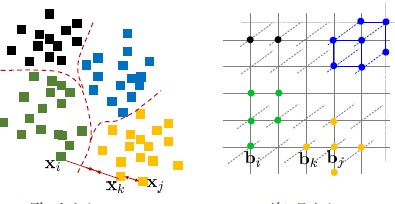

Scalable Graph Hashing with Feature Transformation. [pdf][github]

Qing-Yuan Jiang and Wu-Jun Li.

Proceedings of the International Joint Conference on Artificial Intelligence (IJCAI). 2015

Topic: Learning2Hash

Abstract: Hashing has been widely used for approximate nearest neighbor (ANN) search in big data applications because of its low storage cost and fast retrieval speed. The goal of hashing is to map data points from the original space into a binary-code space where the similarity (neighborhood structure) in the original space is preserved. By directly exploiting the similarity to guide the hash code learning procedure, graph hashing has attracted much attention. However, most existing graph hashing methods cannot achieve satisfactory performance in real applications due to the high complexity of graph modeling. In this paper, we propose a novel method, called scalable graph hashing with feature transformation (SGH), for large-scale graph hashing. Through feature transformation, we can effectively approximate the whole graph without explicitly computing the similarity graph matrix, based on which a sequential learning method is proposed to learn the hash functions in a bit-wise manner. Experiments on two datasets with one million data points show that SGH can outperform state-of-the-art methods in terms of both accuracy and scalability.

Pre-Print


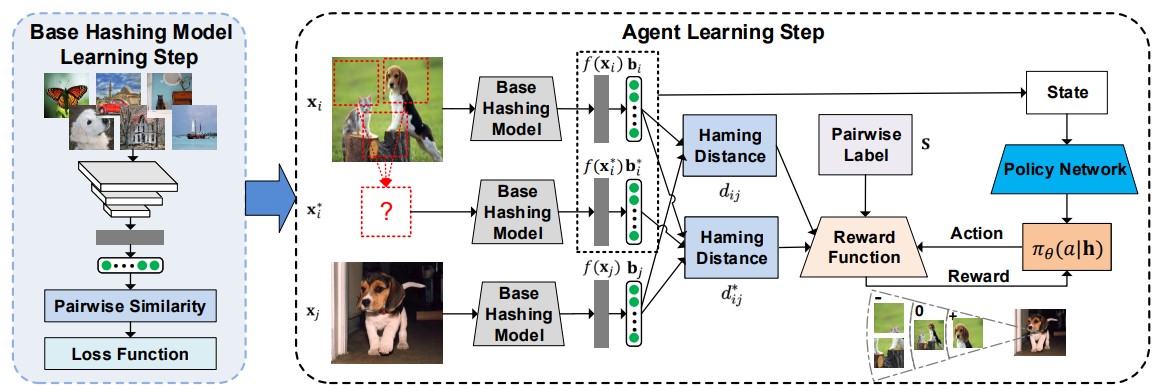

Deep Multi-Index Hashing for Person Re-Identification. [paper link]

Ming-Wei Li, Qing-Yuan Jiang, Wu-Jun Li


On the Evaluation Metric for Hashing. [paper link]

Qing-Yuan Jiang, Ming-Wei Li, Wu-Jun Li


Multiple Code Hashing for Efficient Image Retrieval. [paper link]

Ming-Wei Li, Qing-Yuan Jiang, Wu-Jun Li


Learning to Rebalance Multi-Modal Optimization by Adaptively Masking Subnetworks. [paper link]

Yang Yang, Hongpeng Pan, Qing-Yuan Jiang, Yi Xu, Jinhui Tang


Rethinking Multimodal Learning from the Perspective of Mitigating Classification Ability Disproportion. [paper link]

Qing-Yuan Jiang, Longfei Huang, Yang Yang


Rebalanced Multimodal Learning with Data-aware Unimodal Sampling. [paper link]

Qing-Yuan Jiang, Zhouyang Chi, Xiao Ma, Qi-Rong Mao, Yang Yang, Jinhui Tang


Multimodal Classification via Modal-Aware Interactive Enhancement. [paper link]

Qing-Yuan Jiang, Zhouyang Chi, Yang Yang